Duplicate Content: how to find and fix

8 July 2016 Leave a comment ON-PAGE SEO

Despite that Google continues to insist there is no penalty for duplicate content, everyone should understand it like this: “yes, your website won’t lose its positions, it just won’t get them.” Essentially, it means duplication remains to be a problem, anyway.

Duplicate content it is a presence of identical or similar blocks of content within or across domains. It can cause a lot of troubles for any website, starting from the stagnation in ranking’s growth and ending up with even the de-indexing of website pages. Although according to Google, there is no such a thing as a penalty for duplication, in the situation when the Search Engine suspects the manipulating of search results through duplicates use, it acts entirely predictably and makes a particular website lose its positions.

The easiest way to avoid any duplication-related issues is to prevent them from appearing, of course. Thankfully, there are clear tips right from Google itself on how to do that.

Avert duplication using the following tips:

1Reduce pages’ similarity. A lot of similar content on numerous site pages is a common problem of travel websites, for example. One of Google suggestions is to consolidate similar pages into one, which is, frequently, not an option at all. The most optimal solution is to reduce similarity through increasing a uniqueness of pages content. Alternatively, you can add interesting information on each of the similar pages telling some unique facts about one of the subjects that are present in a text.

2Check the CMS (Content Management System) you use. Unfortunately, it is a common practice to trust CMSs more than it is necessary. You should always check the display of data you’ve entered. The same content may behavior in multiple formats. A website blog is the best example because its entry is duplicated on a home page of a blog and in an archive page pretty often. So, keep an eye on that.

3Boilerplate. It is a repeated non-content across web pages. For example, website navigation (home, about us) or some particular areas (blogroll, navbar). Opinions on boilerplate use differ. Google insists it is a terrible practice but, also, there are a lot of statements about Google ignoring such things.

4Syndicating. When syndicating your content on other sites, you should take into account that Google will pick up a version it considers the most appropriate to display to a user. And it is not necessarily your version. So, make sure to request each site on which your content is syndicated at a link back to your original article or ask them to use the noindex meta tag.

5Use 301 redirects if you have restructured your website.

To follow the given hints is a great thing, of course. But if you’ve just got on a board and have no clue how to find out if your website has duplicate content which, as a result, may affect your site’s ranking, for a start, you need to discover how to detect this possible trouble.

Kinds of duplication

If you are not familiar with duplication issues, it should be pointed out that it’s not just about the content itself, by the way. Assuming that you use different tools to check your website for duplicates, some extra information on that point can stand you in good stead. There are a few pages elements that can be duplicated:

- Content. It is the first thing that occurs to one’s mind when hearing about duplication. The similar or identical blocks of a text present on different pages of a website.

- Meta duplication. Strange but frequently made kind of mistake. Here we are talking about two forms of meta duplication:

- The first one is a duplication of any meta tag’s content on different site pages.

For example, if you have the same meta description on two or more pages. - The second is a duplication of meta tags themselves. For example, if you have two meta description tags on a single page.

- The first one is a duplication of any meta tag’s content on different site pages.

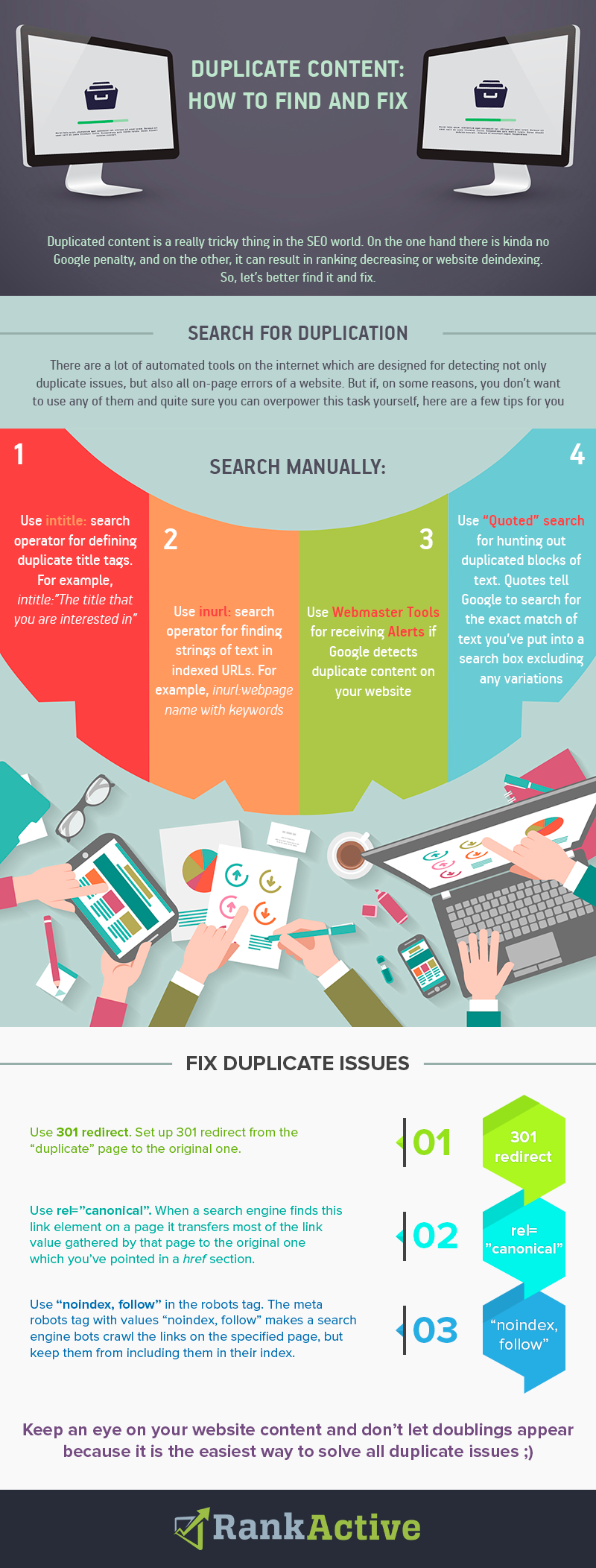

Search manually

Before starting to explore the Internet looking for some automated tool you can try to check own website for duplication by yourself. Here are four ways to conduct such a check:

- intitle:

This search operator helps you to find duplicate title tags. All you need is the search box. Paste there the title that you are interested in. In just a second you will get the results. Some of them will seem quite reasonable, and others may be nothing but total scraping. The last ones are those you should be concerned about. - inurl:

The operator search for strings of text in indexed URLs. It is used in the same way as the previous search operator is. Like this, inurl:webpage name with keywords. As usual, automated scraper sites copy each element of a domain, including the URL structure. And because of such behaviour, you can easily detect this scrapped content.

As usual, automated scraper sites copy each element of a domain, including the URL structure. And because of such behaviour, you can easily detect this scrapped content. - Webmaster Tools Alerts

Reasonably controversial option, I should say. But can be helpful sometimes. If you set up a verified Webmaster Tools account, Google will notify you with an internal message that a duplicate content issue has been found. - “Quotes”

It is quite possible that you have already known about this method of duplication search. But if not, I can assure you that it is the most widely used and simple one. Just copy a part of the text you are interested in and paste it into the search box. The thing is quotes should surround that text. It is done for Google to display the results which exactly match your “query” excluding the variations.

Automation

Pity that the results which are found manually are too sketchy and time-consuming, and the check of a website on all forms of duplication is also essential. Thus, users are forced to look for some automated tool which can provide permanent and comprehensive website audit. A couple of words on why an automated tool is better in comparison with the manual search. A website checkup should be conducted on the consistent basis. In fact, you have to do it every day. And it is not a secret that any website has a tendency to become bigger because pages quantity of which it consists grows all the time. Eventually, the possibility to manually control duplicate issues comes to the zero point.

That is where an automation is the only way out. With RankActive’s Site Auditor, you get the on-page checkup for all the pages of your website. What’s more important, you’ll be notified immediately if the issue is detected, so you’ll be able to fix it on time.

Getting rid from duplicates

So, the full website audit is done and duplicate issues are revealed. What’s next? Let’s fix this.

Methods to fight duplication:

1Redirect. Set up 301 redirects from “duplicate” page to the original one. It does not only create a stronger relevancy and popularity signal for all of those pages but also allows them not to compete with one another any longer. It happens because they are combined into a single page through the used redirection.

2Canonical. Although the 301 redirect is better because of the faster action, the rel=”canonical” tag is also commonly applied thing. The operating principle is practically the same as in the case with 301 redirect use. When a search engine finds this link element on a page, it transfers most of the link value gathered by that page to the original one which you’ve pointed in a href section.

3Noindex, follow. The meta tag with values “noindex, follow” makes search engine bots crawl the links on the specified page, but keep them from including them in their index. Thus, since unwanted pages are not indexed, they are not a problem anymore.

In conclusion

No matter what Google says, it can and will punish a website for duplicate content if it considers that you are trying to manipulate rankings and deceive Internet users. To minimize such a possibility always pay close attention to your website content. Do as much as possible not to let any doublings appear, regularly check your site on duplicate issues (better automatically) and fix them if they are detected without delay.

Embed This Image On Your Site (copy code below):

Tags: